Why Now

Security teams are discovering that LLMs expand the attack surface faster than they reduce toil. In 2025, 74% of organizations reported an AI-related breach in 2024, up from 67% a year prior—proof that model risk is now a board topic, not a lab curiosity. (HiddenLayer | Security for AI)

Executive Summary

- •Treat LLMs as integrations with agency, not just APIs: they read, write, call tools, and leak. Map abuse to MITRE ATT&CK/ATLAS from day one. (MITRE ATT&CK)

- •Anchor controls in standards you already use: NIST CSF 2.0, NIST SSDF (+ the GenAI community profile), ISO/IEC 27001, and IEC 62443 where OT is in play. (NIST Publications)

- •Red-team methods must explicitly test prompt injection, data exfiltration via tools, training data disclosure, and supply-chain paths; use OWASP LLM Top 10 (2025) for coverage. (OWASP Gen AI Security Project)

- •Regulators are raising the floor: CISA/NCSC joint guidance (Apr 2024) on deploying AI securely; CISA/NSA AI data security (May 2025); EU DORA (applies Jan 17, 2025); NIS2 (transposed Oct 17, 2024). (CISA)

- •Case studies show live risks: Hugging Face Spaces token breach (May–Jun 2024) and Copilot red-teaming at Black Hat 2024 demonstrate prompt-abuse and secret exposure in the wild. (TechCrunch)

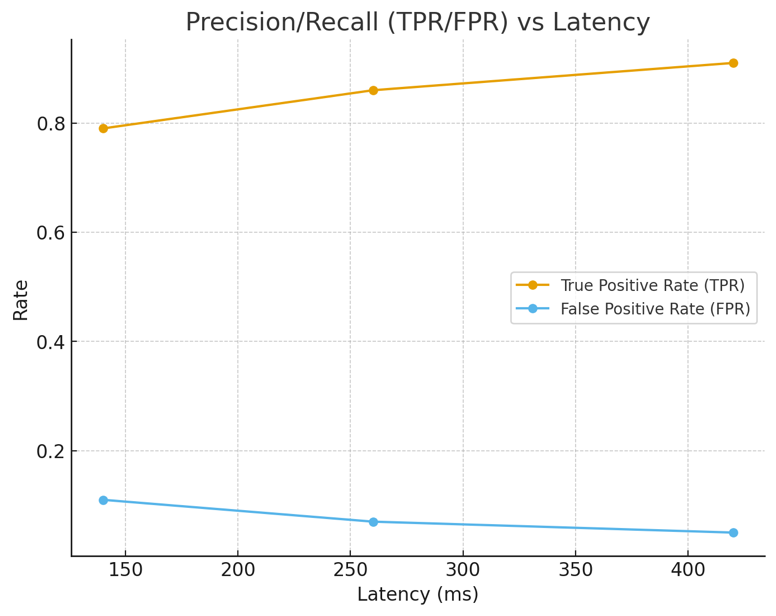

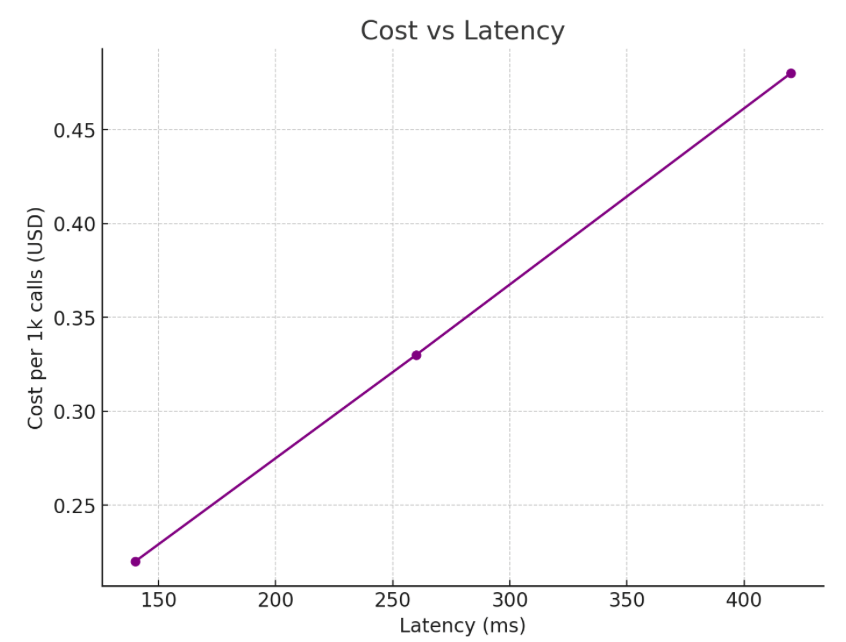

- •Economics: model risk quant fits neatly into FAIR: track TPR/FPR for detections, MTTR, attack-surface deltas from control changes, and coverage % of the OWASP LLM Top 10.

1) Threat & Abuse Cases

Prompt Injection & Jailbreaking (ATLAS tactics)

Attackers embed hidden or obfuscated instructions to override guardrails, pivot tools, or exfiltrate with the model acting as an unwitting agent. New methods (e.g., "TokenBreak" tokenization evasion) show that even content filters can be sidestepped by tiny perturbations. (atlas.mitre.org)

Sensitive Data Disclosure / Model Leakage

Data and system prompts can be extracted via cleverly crafted queries, chained tools, or poisoned context. The OWASP LLM Top 10 (2025) documents Prompt Injection, System Prompt Leakage, and Excessive Agency as first-class risks. (OWASP Gen AI Security Project)

Supply-Chain Compromise

Incident: Hugging Face disclosed unauthorized access to Spaces secrets in late May 2024, a reminder that model repos and serving platforms are high-value targets. (TechCrunch)

Data Poisoning / Retrieval Poisoning

Seeding training or RAG corpora with malicious content to control downstream behaviors.

Model Theft / Extraction

Exfiltrating weights or reproducing capability via output-only attacks; track via ATLAS technique mappings and watermarking/provenance where feasible.

Business Impact Pathway

In identity and commerce contexts, model-driven UX cuts friction but can increase conversion-security tradeoffs: relaxing filters boosts conversions but raises downstream fraud if guardrails are brittle. Measure it.

2) Architecture & Controls

Control Baselines to Cite in Board Decks

- NIST CSF 2.0 — run AI through Govern, Identify, Protect, Detect, Respond, & Recover; extend asset inventories and risk registers to models, data pipelines, tools, and prompts.

- NIST SSDF + SP 800-218A (GenAI profile, Jul 26, 2024) — fold prompt/agent security, dataset lineage, and model release governance into your SDLC.

- ISO/IEC 27001 — ISMS controls for access, key management, logging, and supplier risk apply directly to model hosting and evaluation labs.

- IEC 62443 — if models touch OT/ICS, adopt zone/conduit segmentation and secure-development practices for model-enabled controllers.

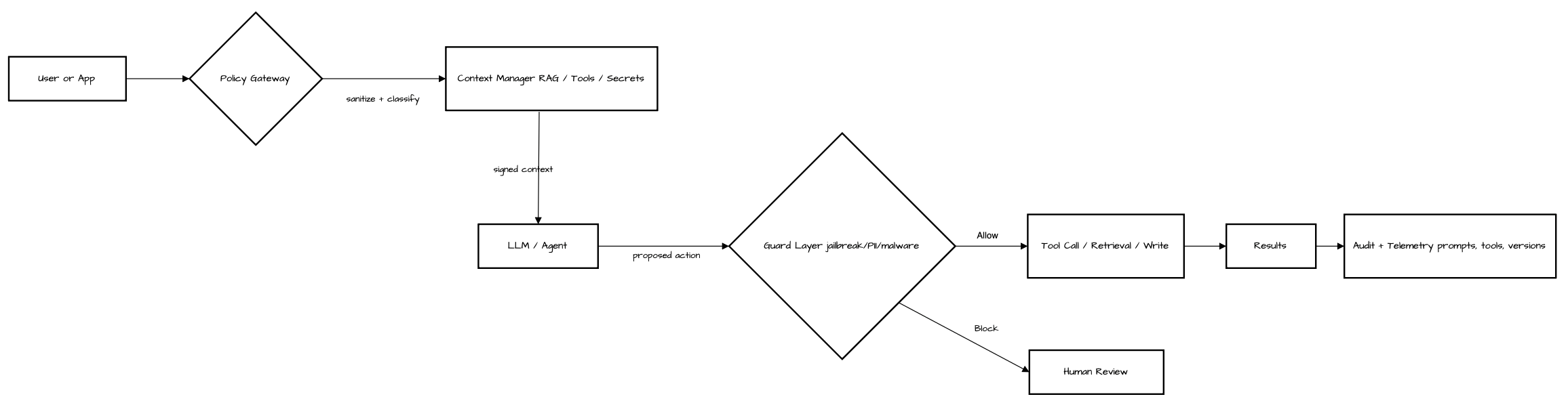

Reference Controls for LLM Systems

- Pre-ingress: sanitize/label external content; content provenance where supported; deny-by-default tool use.

- Policy layer: allowlist tools/APIs; rate-limit function calls; constrain output schemas; verify side effects.

- Context hygiene: no secrets in prompts; encrypt/vector stores; signed context with integrity checks.

- Guard models: run external classifiers (jailbreak, PII, malware) with explicit TPR/FPR targets; fail-closed on high risk.

- Observability: full audit trail of prompts, context, tool calls, outputs, decisions; link to incident response.

- Evaluation harnesses: seed suites with prompt-injection, exfil, poisoning, tool-loop scenarios; track coverage % across OWASP LLM Top 10. (OWASP Gen AI Security Project)

Regulatory Overlays

- U.S.: CISA/NCSC Joint Guidance (Apr 15, 2024) and CISA/NSA AI Data Security (May 22, 2025) lay out lifecycle controls (data provenance, integrity, and monitoring). (CISA)

- EU: DORA is live (Jan 17, 2025) for financial entities—expect ICT risk testing and incident reporting to include AI; NIS2 transposed Oct 17, 2024; use both to justify red-team cadence and supplier due diligence. (EIOPA)

- Japan/Singapore (illustrative "third market"): Japan AISI issued Red Teaming Method guidance (Sept 2024); Singapore's Model AI Governance Framework for GenAI launched May 30, 2024; both emphasize evaluation and transparency. (Morrison Foerster)

3) Case Studies (Named)

Hugging Face Spaces Secret Exposure (May–Jun 2024)

Unauthorized access to Spaces secrets forced key rotation—textbook supply-chain blast radius. If your models or agents depend on third-party hosting, treat them as critical suppliers with attestation and monitoring. (TechCrunch)

Microsoft Copilot Red-Team Demo (Black Hat 2024)

Researchers showed automated phishing and data extraction by abusing Copilot's integrations, underscoring the risk of agentic LLMs with live permissions. (WIRED)

HiddenLayer Field Data (2025)

Rise in organizations reporting AI breaches and under-reporting behaviors—useful to calibrate breach likelihood and detection assumptions in your FAIR models. (HiddenLayer | Security for AI)

4) Economics — A FAIR-Style View

| Scenario (per year) | Frequency (λ) | Loss Magnitude (P90) | Control Change | Δ Attack Surface | Notes |

|---|---|---|---|---|---|

| Prompt-injection leads to tool abuse (no PII) | 6 | $150k | Deny-by-default tools + output schema checks | −45% | Cuts agentic blast radius |

| Retrieval poisoning → bad decisions | 2 | $500k | Signed sources + retrieval allowlist | −35% | Quality gates improve TPR |

| Secrets in prompts leak via logs | 1 | $1.2M | Secret-scanning & redaction + vault use | −60% | Low cost / high benefit |

| Model supplier compromise | 0.5 | $3.0M | SBOM/VEX + attested builds + escrow | −30% | Slower MTTR without attestation |

| Data exfil via tool loop | 1 | $900k | Tool rate-limits, off-band human approval | −40% | Detect via tool-call anomalies |

Track detection TPR/FPR, MTTR, and the coverage % of abuse cases in your evaluation harness; price controls by Δ Expected Loss rather than narratives.

5) Governance: Policies, Audits, Cadence, Suppliers

- Policies. Add an AI Product Security Policy that references CSF 2.0, SSDF, and OWASP LLM Top 10; require threat modeling with ATLAS for each feature. (NIST Publications)

- Audit trails. Immutable logs for prompts/context/tool calls/output; attach model/version/hash; required for incident reconstruction and many regulatory regimes.

- Red-team cadence. At least quarterly or pre-release for major capability changes; include external and multilingual testing (see Singapore's focus on diverse red-teaming). (IAPP)

- Supplier risk. Demand SBOM/VEX, secure-by-design commitments (CISA), and incident-notification SLAs; verify hosting posture and secrets management. (CISA)

6) Implementation Playbook (30 / 60 / 90 Days)

Day 0–30: Stand Up Foundations

- Inventory models, prompts, tools, data stores; add to CSF asset register.

- Block secrets in prompts; enforce vault usage; enable prompt/log redaction.

- Ship an eval harness covering OWASP LLM Top 10; measure coverage % and baseline TPR/FPR for your classifiers. (OWASP Gen AI Security Project)

- Add kill switches and deny-by-default for all new tools.

Day 31–60: Exercise & Close Gaps

- Run a red team focused on prompt injection + exfil; include external content and multilingual attacks (Japan/Singapore playbooks are good references). (Morrison Foerster)

- Wire detections to SOAR; measure MTTR end-to-end (alert → contain → restore).

- Apply CISA/NCSC deployment guidance to production configurations, document in change records. (CISA)

Day 61–90: Prove Resilience & Plan Scale

- Pilot tool allowlists and human-in-the-loop for high-risk actions; quantify conversion impact vs. fraud reduction where applicable.

- Establish quarterly red-team cadence and supplier attestations (DORA/NIS2 contexts). (EIOPA)

- Executive review: show attack-surface deltas, TPR/FPR, MTTR, and updated Expected Loss.

7) Watchlist — Next 12 Months

- CISA expands Secure-by-Design for AI with more data-security and model-evaluation artifacts; agencies align. (CISA)

- OWASP GenAI publishes additional testing suites and updates Top 10 mappings. (OWASP Gen AI Security Project)

- EU DORA TLPT (threat-led penetration testing) scope clarifies AI-specific expectations. (digital-operational-resilience-act.com)

- Japan AISI and Singapore IMDA/AI Verify broaden multilingual red-team methods and reporting. (Morrison Foerster)

Figure 1 — Reference architecture: LLM controls

Figure 2 —Precision/recall vs latency and Cost vs latency

Takeaway: Tighter guardrails improve TPR and reduce FPR but add latency and cost; pick per use-case SLOs.

Fact-Check Table

| Claim | Source | Date | Confidence (1–5) |

|---|---|---|---|

| 74% of orgs reported an AI breach in 2024 | HiddenLayer report/press | Mar 4, 2025 | 4 |

| CSF 2.0 released Feb 26, 2024 | NIST CSWP | Feb 26, 2024 | 5 |

| CISA/NCSC issued AI deployment guidance Apr 15, 2024 | CISA alert | Apr 15, 2024 | 5 |

| CISA/NSA AI data-security best practices published | CISA/NSA info sheet | May 22, 2025 | 5 |

| DORA applied Jan 17, 2025 | EIOPA / EU | Jan 17, 2025 | 5 |

| NIS2 transposition deadline Oct 17, 2024 | EC Digital Strategy | Oct 17, 2024 | 5 |

| Hugging Face Spaces secrets exposure | TechCrunch / HF blog | May 31–Jun 4, 2024 | 5 |

| Copilot red-team demo at Black Hat 2024 | WIRED | Aug 2024 | 4 |

| OWASP LLM Top 10 names Prompt Injection, System Prompt Leakage, Excessive Agency | OWASP GenAI | 2025 | 5 |

Sources

- NIST. Cybersecurity Framework (CSF) 2.0 (Feb 26, 2024). (NIST Publications)

- NIST. SP 800-218A Secure Software Development Practices for Generative AI (Jul 26, 2024). (NIST Computer Security Resource Center)

- CISA & partners. Joint Guidance: Deploying AI Systems Securely (Apr 15, 2024). (CISA)

- CISA/NSA. Best Practices for Securing Data Used to Train & Operate AI Systems (May 22, 2025). (CISA)

- OWASP GenAI. Top 10 for LLM Applications (2025). (OWASP Gen AI Security Project)

- ENISA. Threat Landscape 2024. (Security Delta)

- EU. DORA (applies Jan 17, 2025). NIS2 transposition (Oct 17, 2024). (EIOPA)

- MITRE. ATLAS adversarial-ML knowledge base; ATT&CK. (atlas.mitre.org)

- Japan AISI. Red Teaming Method (Sept 2024); Singapore Model AI Governance Framework for GenAI(May 30, 2024). (Morrison Foerster)

- HiddenLayer. AI Threat Landscape 2024/2025 (breach stats). (21998286.fs1.hubspotusercontent-na1.net)

- Wired. Copilot exploitation demo at Black Hat 2024. (WIRED)

- TechCrunch. Hugging Face Spaces unauthorized access (May 31, 2024). (TechCrunch)