Executive Summary

- Data fragmentation is rarely a "single integration" problem; it is mismatched meaning, inconsistent identity, and unreliable pipelines that break workflows and AI.

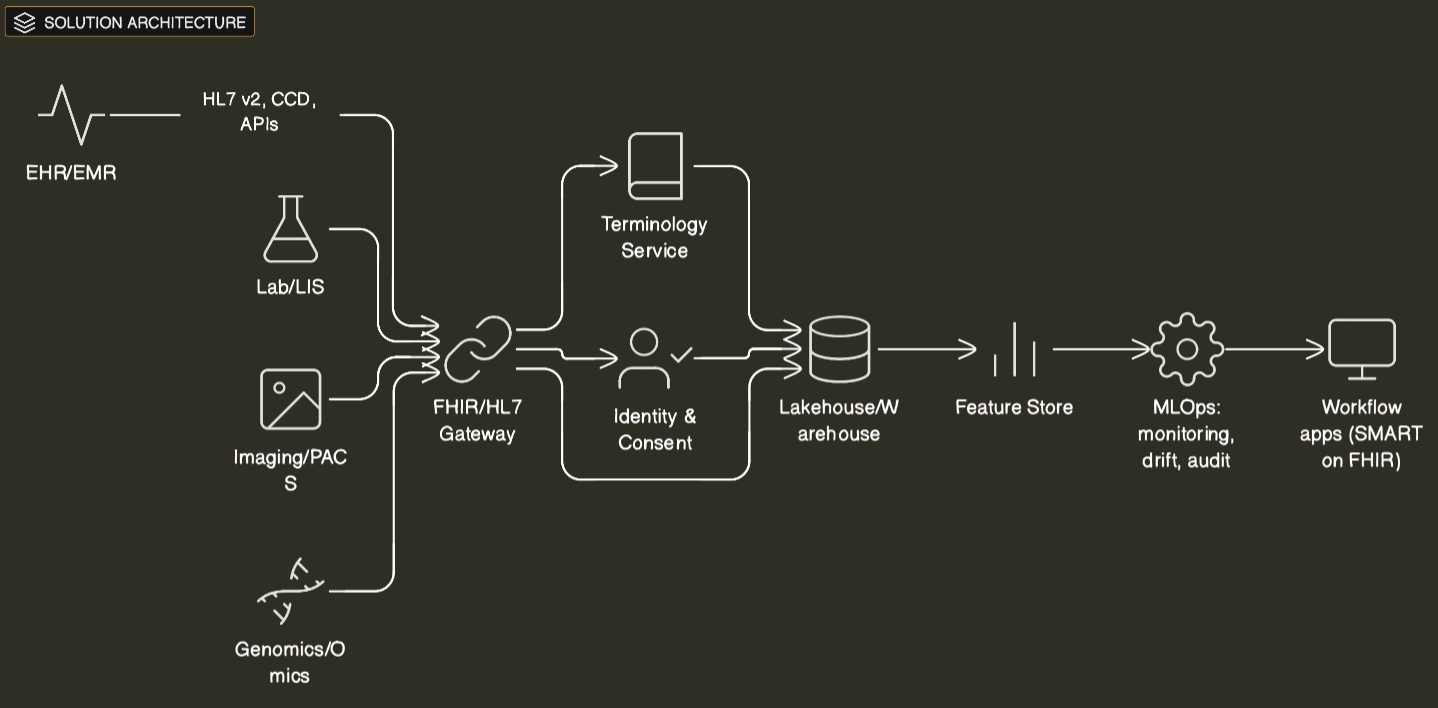

- A workable unifying principle combines a canonical model (FHIR plus an analytics model such as OMOP), a trust fabric (consent, lineage, de-identification), reliability engineering (SLAs), and workflow co-design.

- Regulation is turning interoperability into deadlines: CMS-0057-F phases start Jan 1, 2026, with major API requirements primarily by Jan 1, 2027.

- FHIR is spreading quickly, but version and profile fragmentation are becoming real execution risks.

- Downtime is an interoperability tax; availability targets must be explicit from day one, not retrofitted.

- Case signals in 2025: payer APIs (Da Vinci IGs) are being operationalized; TEFCA connectivity is scaling via major EHR networks; platforms are packaging domain architectures (FHIR ingestion, governance, NLP).

- Executives should evaluate interoperability programs like product portfolios: workflow adoption, reliability, and governance are as important as "data access."

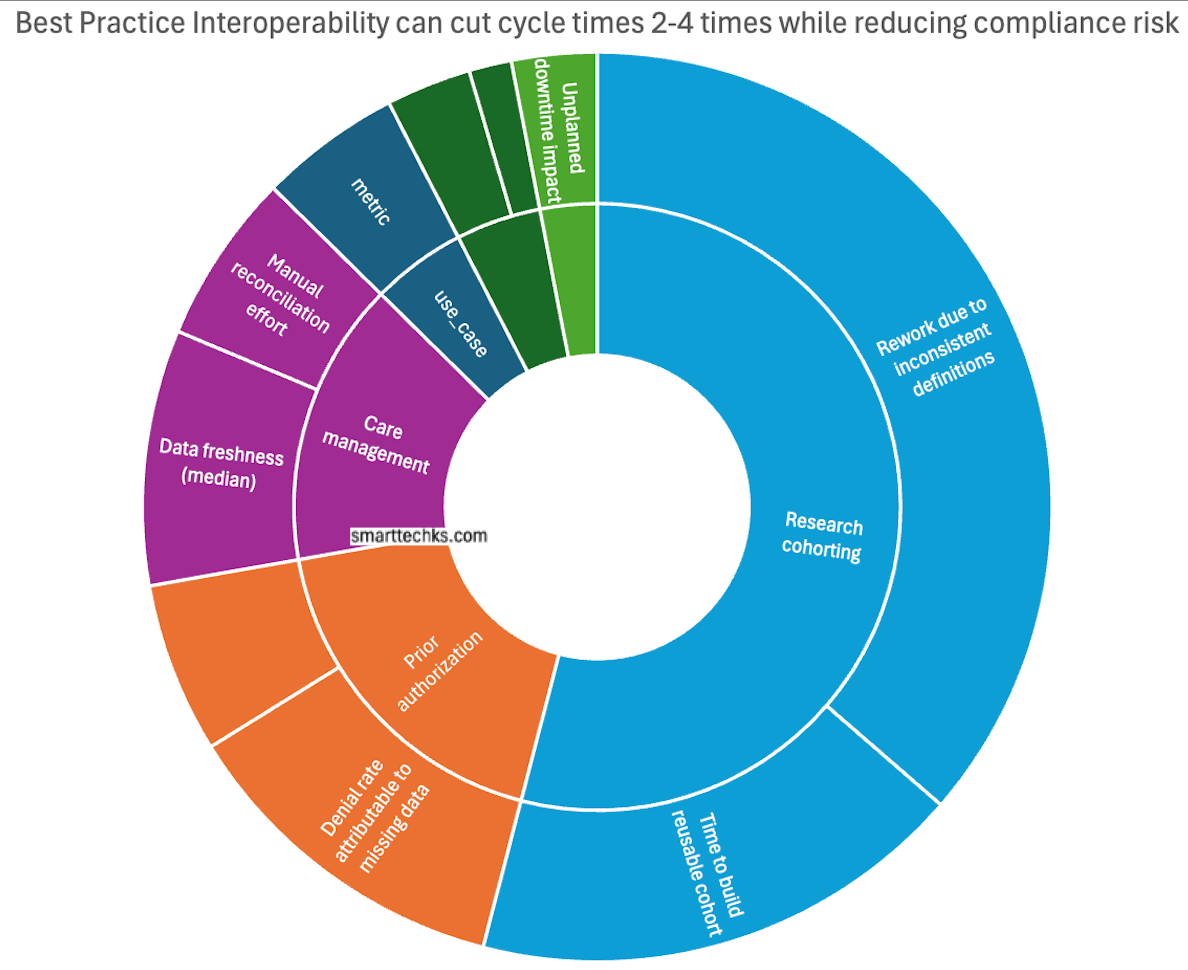

- Investors should look for vendors that attach to measurable outcomes (cycle time, denial rate, cohort speed), not generic data plumbing.

Context and Why Now

Healthcare has always been data-rich and insight-poor. The difference now is that boards and investors are funding AI as a productivity and growth lever, while regulators are forcing data exchange into standardized interfaces. That combination changes the business case: fragmented data is no longer an inconvenience. It is a constraint on revenue, operations, research, and risk management.

Two market indicators are worth noting. First, industry market research continues to forecast rapid growth in AI in healthcare. Second, interoperability is itself a growing spend category: one market outlook estimates global healthcare interoperability solutions revenue at $3.418B (2023) and $8.568B (2030). Market estimates vary by methodology, but the direction is consistent: organizations are paying, increasingly, for the ability to reuse data across care, claims, research, and product development.
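To make the growth rate behind that outlook explicit, the two point estimates can be converted into an implied compound annual growth rate. The sketch below is only arithmetic on the figures quoted above; the function name `cagr` is an illustrative choice, and the result inherits whatever methodology produced the original estimates.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate implied by two point estimates."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted in the text, in billions of USD.
implied = cagr(3.418, 8.568, 2030 - 2023)
print(f"Implied CAGR: {implied:.1%}")  # roughly 14% per year
```

An implied rate of roughly 14% per year is consistent with the "growing spend category" framing, though other forecasts may land elsewhere.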

Regulation is the near-term forcing function. In the U.S., CMS states that impacted payers must implement certain provisions by Jan 1, 2026, with the API requirements due primarily by Jan 1, 2027. CMS also provides detailed guidance on standards and implementation, including conditions for adopting updated standards and the implications of USCDI versioning (including an explicit USCDI v1 expiry date). In the EU, implementation of the AI Act is moving from legislative milestone to procurement and governance expectations. In the U.K., regulators are sharpening lifecycle expectations for AI-enabled medical software, including post-market surveillance requirements.

Reliability is the quiet third driver. When interoperability services fail, organizations pay twice: first in disruption (manual workarounds, delayed decisions), then in trust (clinician skepticism, patient dissatisfaction). Industry analysis continues to highlight the scale of downtime costs in healthcare IT. Even if the exact number differs for a given organization, the management implication is stable: data exchange must be engineered with clear SLAs and tested downtime procedures, or it becomes a fragile dependency for clinical and operational workflows.

Evidence-Backed Thesis: Interoperability is an Operating Model, Not an Integration Project

Most health systems still treat interoperability as a pipeline problem: connect system A to system B, normalize a few fields, land it in a warehouse or lake, and declare progress. That approach stalls because it ignores the hardest constraint: meaning and accountability. "Unified data" is not a file format. It is a set of decisions about what is equivalent, what is trustworthy, and who is responsible when it is wrong.

A Durable Unifying Principle Has Four Parts:

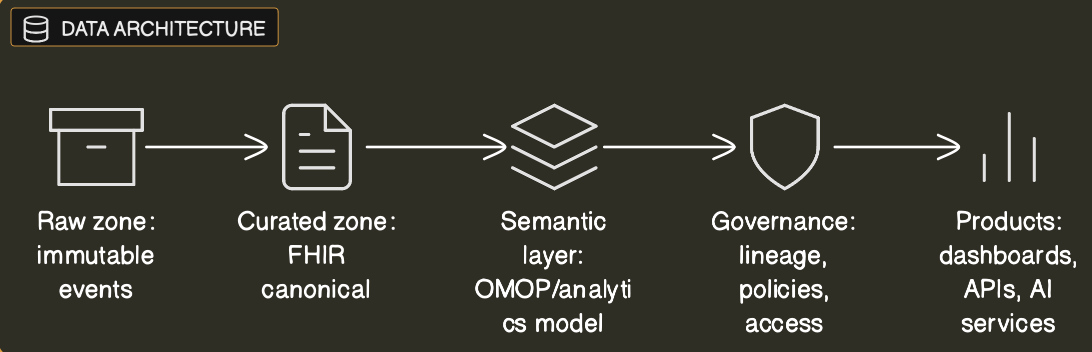

1. Canonical Models, by Use Case

Use FHIR for transactional exchange and workflow integration; use an analytics model (often OMOP or a close cousin) for population analytics, real-world evidence, and model training. The goal is not one model to rule them all; it is consistent mapping rules and versioning discipline across models.
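As an illustration of "consistent mapping rules and versioning discipline," the sketch below maps a FHIR Patient resource (as a plain dictionary) to a subset of the OMOP `person` columns. It is a minimal sketch under stated assumptions: the `MAPPING_VERSION` label, the `OmopPerson` class, and the function name are hypothetical, and only gender and birth year are mapped. The gender concept IDs (8507, 8532) are the OMOP standard concepts for male and female; anything else falls back to 0.

```python
from dataclasses import dataclass
from typing import Optional

MAPPING_VERSION = "2025.1"  # hypothetical label for this set of mapping rules

# OMOP standard gender concepts; unmapped values fall back to 0.
GENDER_CONCEPTS = {"male": 8507, "female": 8532}


@dataclass(frozen=True)
class OmopPerson:
    person_source_value: str
    gender_concept_id: int
    gender_source_value: Optional[str]
    year_of_birth: Optional[int]
    mapping_version: str  # not an OMOP column; carried along for lineage


def fhir_patient_to_omop_person(patient: dict) -> OmopPerson:
    """Map a FHIR Patient dict to a partial OMOP person record."""
    if patient.get("resourceType") != "Patient":
        raise ValueError("expected a FHIR Patient resource")
    gender = patient.get("gender")
    birth_date = patient.get("birthDate")  # FHIR date: YYYY, YYYY-MM, or YYYY-MM-DD
    return OmopPerson(
        person_source_value=patient["id"],
        gender_concept_id=GENDER_CONCEPTS.get(gender, 0),
        gender_source_value=gender,
        year_of_birth=int(birth_date[:4]) if birth_date else None,
        mapping_version=MAPPING_VERSION,
    )


example = {
    "resourceType": "Patient",
    "id": "pat-001",
    "gender": "female",
    "birthDate": "1980-04-12",
}
person = fhir_patient_to_omop_person(example)
print(person)
```

Stamping every output row with the mapping version is one way to keep transactional and analytic views reconcilable when the rules change.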

2. A Trust Fabric for Reuse

Identity resolution, consent and authorization, de-identification where appropriate, lineage, and policy enforcement. Without this, AI programs become a compliance and reputational risk, and reuse collapses into one-off approvals.

3. Reliability Engineering as a Product Capability

SLAs for uptime, latency, data freshness, and completeness. Monitoring and incident response that treat data exchange as a tier-1 service, not as a back-office integration.
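The four SLA dimensions named here can be expressed as explicit, testable thresholds. The following is a minimal sketch, not a monitoring implementation: the class names, field names, and threshold values are hypothetical, and real targets would come from the organization's own tiering decisions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SlaTargets:
    min_uptime_pct: float
    max_p95_latency_ms: float
    max_freshness_minutes: float
    min_completeness_pct: float


@dataclass(frozen=True)
class ObservedMetrics:
    uptime_pct: float
    p95_latency_ms: float
    freshness_minutes: float
    completeness_pct: float


def sla_breaches(targets: SlaTargets, observed: ObservedMetrics) -> list[str]:
    """Return the names of the observed metrics that violate their targets."""
    breaches = []
    if observed.uptime_pct < targets.min_uptime_pct:
        breaches.append("uptime_pct")
    if observed.p95_latency_ms > targets.max_p95_latency_ms:
        breaches.append("p95_latency_ms")
    if observed.freshness_minutes > targets.max_freshness_minutes:
        breaches.append("freshness_minutes")
    if observed.completeness_pct < targets.min_completeness_pct:
        breaches.append("completeness_pct")
    return breaches


# Illustrative values only.
targets = SlaTargets(99.9, 500.0, 15.0, 98.0)
observed = ObservedMetrics(99.95, 620.0, 10.0, 97.2)
print(sla_breaches(targets, observed))
```

In this example, latency and completeness are out of bounds even though uptime looks healthy, which is why a single availability number is not enough.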

4. Workflow Co-Design

Data must show up inside the tools that people already use (most often the EHR) with minimal clicks, clear provenance, and the ability to write back when needed. Otherwise, "interoperable" data remains academically interesting and operationally ignored.

Data Fragmentation: Where the Real Failure Modes Hide

Executives often frame fragmentation as "many systems." Practitioners see sharper failure modes:

- Semantic drift: the same label means different things across departments (encounter types, normal ranges, problem lists).

- Identity collisions: imperfect matching across organizations, apps, and regions; errors propagate quickly once data is reused.

- Format mismatch: HL7 v2, PDFs, imaging, genomics, claims, and free-text notes each require different quality controls and governance.

- Workflow mismatch: data arrives late, lacks context, or cannot be written back into the EHR, so teams build spreadsheets and "shadow" processes.

FHIR helps, but it is not magic. A 2025 survey by HL7 International and Firely reported that 71% of respondents said FHIR is actively used in their country for at least a few use cases; it also highlighted growing challenges around version fragmentation. The executive takeaway: standard APIs reduce friction, but they increase the need for disciplined governance around profiles, versions, and semantics.
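One concrete form of version governance is checking each partner's declared FHIR version against an approved list. A FHIR server's CapabilityStatement carries a `fhirVersion` field; the sketch below inspects already-fetched statements as dictionaries. The allow-list, partner names, and function name are hypothetical, and profile-level checks are out of scope.

```python
ALLOWED_FHIR_VERSIONS = {"4.0.1"}  # hypothetical policy: R4 only


def check_fhir_version(
    capability_statement: dict,
    allowed: frozenset = frozenset(ALLOWED_FHIR_VERSIONS),
) -> tuple[bool, str]:
    """Return (is_allowed, declared_version) for a CapabilityStatement dict."""
    if capability_statement.get("resourceType") != "CapabilityStatement":
        raise ValueError("expected a FHIR CapabilityStatement resource")
    version = capability_statement.get("fhirVersion", "unknown")
    return version in allowed, version


# Illustrative partner endpoints and their declared versions.
partners = {
    "payer-a": {"resourceType": "CapabilityStatement", "fhirVersion": "4.0.1"},
    "lab-b": {"resourceType": "CapabilityStatement", "fhirVersion": "3.0.2"},
}

off_policy = {}
for name, statement in partners.items():
    ok, version = check_fhir_version(statement)
    if not ok:
        off_policy[name] = version

print(off_policy)
```

Running a check like this on onboarding and on a schedule turns "version fragmentation" from a survey finding into a tracked inventory.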

Mini-Case Studies (2025 Signals)

1) Compliance as a Forcing Function: CMS-0057-F Becomes a Modernization Window

CMS states that impacted payers must implement certain provisions by Jan 1, 2026, with API requirements due primarily by Jan 1, 2027. Organizations that treat this as a modernization window (rather than minimum compliance) are building reusable FHIR gateways, shared terminology services, and operational metrics that can be reused beyond compliance.

2) Network Effects: TEFCA Connectivity Scaling Through Major EHR Ecosystems

Becker's reported that 17 organizations using Epic went live with TEFCA participation in October 2025 and cited more than 1,000 hospitals and health systems connected through Epic. This is not just adoption news: it changes distribution, partnership strategy, and switching costs for interoperability-adjacent products.

3) Platform Packaging: "Domain Architectures" Replace Bespoke Integration

Databricks' healthcare and life sciences announcement explicitly positions a packaged Lakehouse approach with interoperability and NLP partner integrations. Whether a buyer chooses Databricks, Snowflake, or a hyperscaler, the strategic shift is the same: the market is moving from bespoke integration projects to repeatable domain patterns.

4) De-identification + NLP as Infrastructure, Not a Side Project

John Snow Labs' release notes emphasize stronger de-identification controls and robustness improvements for healthcare NLP pipelines. The management lesson is straightforward: if unstructured data is critical to ROI, de-identification and text processing must be governed infrastructure with clear policies, lineage, and auditability, not per-team scripts.

Economics: ROI Model, Costs, and Time-to-Value

A practical business case treats interoperability as a portfolio of use cases sharing fixed costs.

Fixed Costs

- Terminology and mapping services

- Identity and consent services

- Governance/lineage/audit logging

- Reliability engineering (SLA monitoring, incident response)

Variable Costs

- Per-source onboarding (mapping, validation, backfill)

- Per-use-case productization (workflow integration, model validation, training)

A useful rule of thumb: if a program cannot show reuse (multiple use cases drawing from the same governed datasets and services) within 6–9 months, it is likely to remain a "platform in search of a problem."
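The portfolio logic can be made concrete with simple arithmetic: fixed foundation costs are spread across every use case that reuses them, while variable costs are paid per use case. The sketch below uses invented dollar figures purely for illustration; the function name and amounts are assumptions, not estimates from any source.

```python
def cost_per_use_case(
    fixed_cost: float, variable_cost_per_use_case: float, use_cases: int
) -> float:
    """Average cost per use case when fixed costs are shared across all of them."""
    if use_cases < 1:
        raise ValueError("use_cases must be at least 1")
    return fixed_cost / use_cases + variable_cost_per_use_case


# Hypothetical figures in USD.
FIXED = 2_000_000
VARIABLE = 300_000

for n in (1, 2, 4, 8):
    print(n, cost_per_use_case(FIXED, VARIABLE, n))
```

With these assumed numbers, the average cost falls from 2.3M for a single use case to 0.8M at four, which is the economic argument for demonstrating reuse early.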

| Cost / Benefit Driver | What It Includes | Typical Measurement |
|---|---|---|
| Fixed Foundation Costs | FHIR gateway, terminology, identity, governance, platform ops | Annual run rate; % reused |
| Variable Onboarding | Source mapping, adapters, validation, docs | Weeks per source; defect rate |
| Workflow Integration | EHR integration, UX, training, change management | Adoption rate; minutes saved |
| Risk Mitigation | Reduced incidents, audit readiness, resiliency | Downtime avoided; findings |
| Revenue/Productivity | Prior auth, RCM, scheduling, cohort speed | Cycle time; cost per claim |

Risk & Governance: Bias, Safety, Cybersecurity, Change Management

Three risks dominate board conversations:

- Bias and safety. Even when an AI tool is not a regulated medical device, the operational discipline matters: documentation, monitoring, drift management, and incident response. The FDA's Good Machine Learning Practice framing provides a practical anchor for lifecycle governance.

- Cybersecurity and resilience. Treat interoperability services as tier-1 systems with explicit SLAs and tested downtime procedures. Sector experience and analyses keep the costs of failure salient.

- Change management. Interoperability programs fail when they add clicks and cognitive load. The antidote is product management: clear ownership, a roadmap, and adoption metrics that drive iteration.

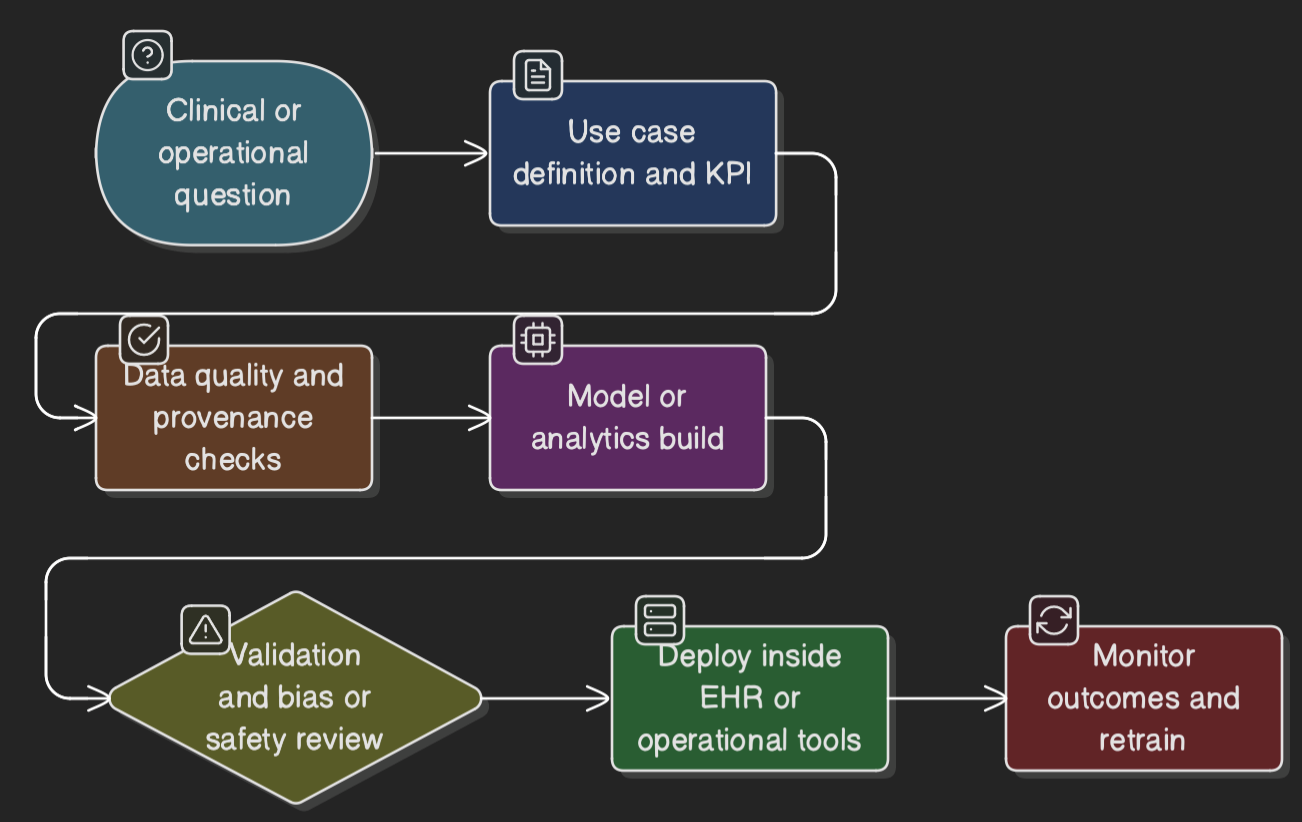

Implementation Playbook: Phased Plan and KPIs

Phase 0 (0–60 Days): Decide the Unifying Principle

- Choose canonical models by use case; define identity/consent ownership; set tier-1 SLAs and downtime procedures.

- KPIs: decision completed; top 20 data elements defined; SLAs approved.

Phase 1 (2–6 Months): Build the Trust Fabric and First Bridge

- Stand up terminology, lineage, auditable access; implement FHIR gateway; launch 1–2 workflow-anchored use cases.

- KPIs: time-to-onboard source; API latency/error rate; workflow adoption.

Phase 2 (6–12 Months): Scale Semantics and Reliability

- Expand mapping coverage; automate data quality checks; operationalize de-id and NLP where needed.

- KPIs: defect rate; governed datasets available; MTTR for data incidents.

Phase 3 (12–18 Months): Productize AI and Measure Portfolio ROI

- Move from "models" to "products" (monitoring, retraining policies, human controls). Stop low-adoption pilots quickly.

- KPIs: time from request to production; sustained adoption; realized cycle time / cost reductions.
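Several of the KPIs above are simple to compute once incidents are logged consistently. As one example, MTTR for data incidents (a Phase 2 KPI) is the mean of resolution time minus detection time. The sketch below assumes each incident is recorded as a (detected, resolved) timestamp pair; the function name and the sample incidents are hypothetical.

```python
from datetime import datetime, timedelta


def mean_time_to_resolve(incidents: list[tuple[datetime, datetime]]) -> timedelta:
    """Mean of (resolved - detected) across logged incidents."""
    if not incidents:
        raise ValueError("at least one incident is required")
    total = sum(
        (resolved - detected for detected, resolved in incidents),
        timedelta(),
    )
    return total / len(incidents)


# Illustrative incident log: (detected, resolved).
incidents = [
    (datetime(2025, 1, 5, 8, 0), datetime(2025, 1, 5, 10, 30)),
    (datetime(2025, 2, 11, 14, 0), datetime(2025, 2, 11, 15, 0)),
    (datetime(2025, 3, 2, 22, 0), datetime(2025, 3, 3, 1, 30)),
]

mttr = mean_time_to_resolve(incidents)
print(mttr)
```

The calculation is trivial; the discipline lies in agreeing on what counts as "detected" and "resolved" for a data incident before the numbers are compared across teams.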

Solution Architecture Diagram

Process Flow Diagram

Data Architecture Diagram

Best-practice interoperability can cut cycle times by a factor of 2 to 4 while reducing compliance risk.

What to Watch Next (12 Months)

- Whether CMS-0057-F drives real workflow change or minimal compliance (watch production API performance).

- FHIR version convergence vs fragmentation.

- Whether platforms ship governance + reliability by default (lineage, policy, SLA monitoring).

- Procurement requirements for AI governance as EU/U.K. frameworks mature.

- The next major outage/cyber event as a real-world resilience test.

Sources

- CMS-0057-F: Final Rule on Interoperability and Prior Authorization (2025)

- HL7 International & Firely: FHIR Adoption Survey 2025

- Becker's Hospital Review: TEFCA Adoption Report (October 2025)

- Databricks Healthcare & Life Sciences Announcement (2025)

- John Snow Labs Release Notes: Healthcare NLP & De-identification (2025)

- Market Research: Global Healthcare Interoperability Solutions Forecast (2023-2030)

- FDA: Good Machine Learning Practice Guidance (2021-2025 updates)

- EU AI Act Implementation Guidance (2024-2025)